- cross-posted to:

- technology@lemmy.world

Companies are going all-in on artificial intelligence right now, investing millions or even billions into the area while slapping the AI initialism on their products, even when doing so seems strange and pointless.

Heavy investment and increasingly powerful hardware tend to mean more expensive products. To discover if people would be willing to pay extra for hardware with AI capabilities, the question was asked on the TechPowerUp forums.

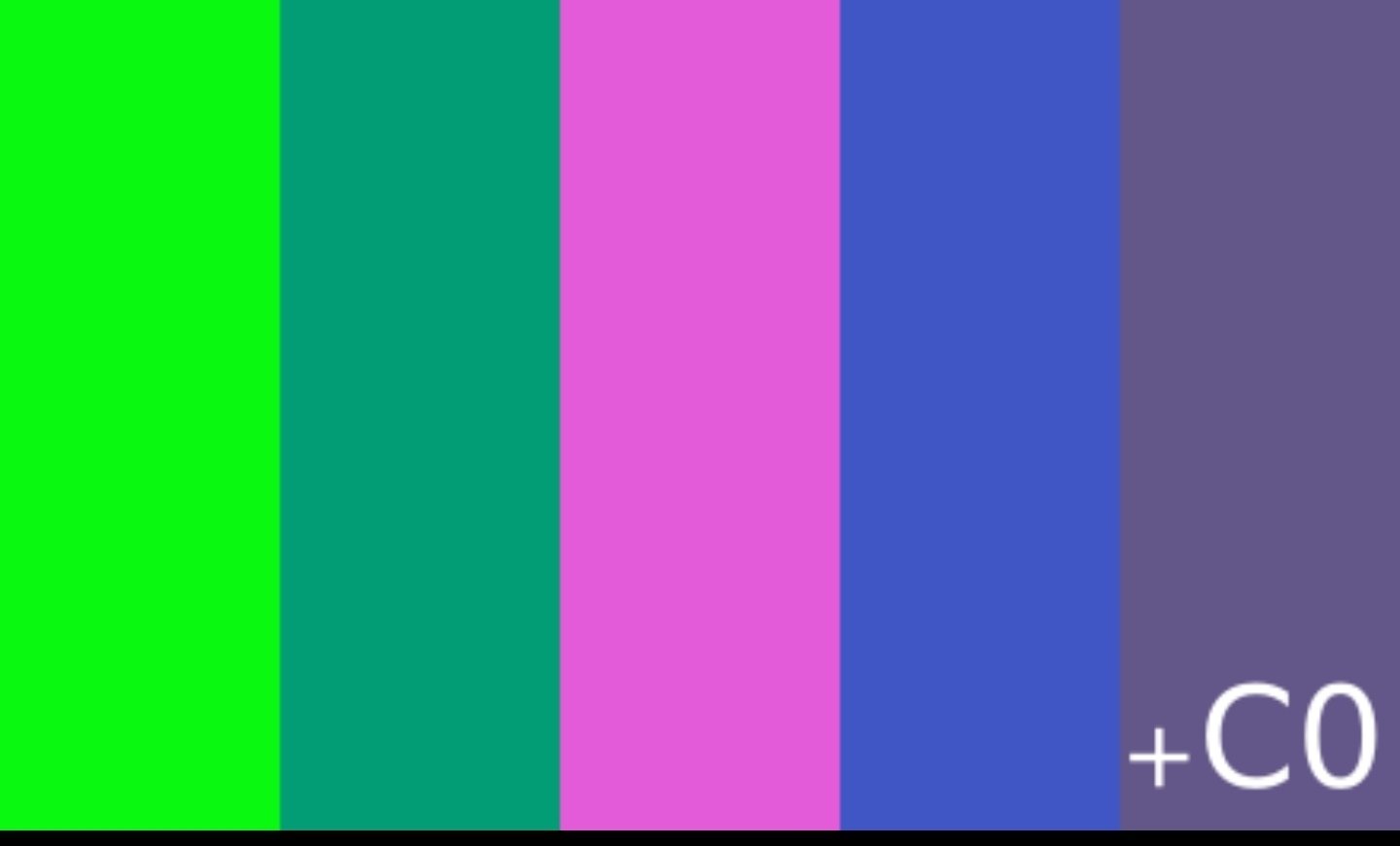

The results show that over 22,000 people, a massive 84% of the overall vote, said no, they would not pay more. More than 2,200 participants said they didn’t know, while just under 2,000 voters said yes.
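
As a rough sanity check on those figures, the shares can be recomputed from the counts. This is only a sketch: the exact vote totals are not given, so the numbers below are rounded assumptions taken from the wording above ("over 22,000", "more than 2,200", "just under 2,000"), and `vote_shares` is a hypothetical helper name.

```python
# Approximate vote counts, rounded from the article's wording.
# These are assumptions, not the poll's exact totals.
votes = {"no": 22_000, "dont_know": 2_200, "yes": 2_000}


def vote_shares(counts):
    """Return each option's share of the total vote as a percentage."""
    total = sum(counts.values())
    return {option: 100 * n / total for option, n in counts.items()}


shares = vote_shares(votes)
for option, pct in shares.items():
    print(f"{option}: {pct:.1f}%")
```

With these rounded inputs the "no" share comes out at roughly 84%, which is consistent with the figure quoted in the article.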

the question was asked on the TechPowerUp forums.

So this survey is next to worthless, right?

Survey shows most people wouldn’t pay extra

Fuck that. I won’t even buy new hardware at all at this point apart from a flash drive or something else cheap.

Wasn’t there a poll that said the contrary yesterday?!

Personally, I agree with this one. I would not pay for the current capabilities “AI” delivers.

The only article I saw about this yesterday on Lemmy had the same claim.

Honestly, with my current brain capacity, I may have just misread it… Occam’s Razor and all

AI “enhanced” hardware = slower, bloated, inefficient hardware.

What hardware actually needs an AI? Does my hard drive need its own hard drive and processor to run its AI? To do what? So dumb.

And if there are any useful applications, I can probably guarantee it’s got nothing to do with actual “artificial intelligence”.

I mean, maybe that computing performance would be useful in the future for things that aren’t just AI shovelware. As long as it keeps making this kind of computation more energy efficient, I don’t have a problem with it.

When MMX first came out, the advertisements didn’t say “a new CPU with vectorized extensions.” They said “MULTIMEDIA!” which happened to be the popular tech buzzword at the time. I think this is a lot of the same. The buzzword is “AI,” not “matrix processing” or whatever an NPU actually does.

While shoehorning AI into every tech product is ridiculous, having a CPU with accelerated data processing features will almost certainly be useful for other workloads in the future.

I’ll admit, I’m not exactly up to speed on what integrated NPUs bring to the table, but I’ve written plenty of code that leverages MMX/AVX instructions for things completely unrelated to multimedia. I expect this will end up being the same.

That’s a good point, but really all that means is it might be of use in a few years, once all the hype BS bathwater has washed away and we can see what the baby looks like.

Having tortured that analogy, I should probably point out I’m a bit of a Luddite with these things. This is not a sea change I look forward to, in any way, shape or form…

I call BS.